The $880 Million Lesson

Let’s go back to 2021. The housing market is on fire, prices are only going up, and boomers can’t help but pat themselves on the back.

Real estate platform Zillow was at the center of the story. It had spent years perfecting its ‘Zestimate’ home valuation algorithm, which millions of homebuyers and sellers trusted as the industry benchmark.

With so much insight into the market, the company decided to use its data to buy and flip houses at scale.

The model performed well in normal conditions, but as Zillow scaled up it became a market maker – its own buying activity started influencing the prices its model was trying to predict. A feedback loop the algorithm never accounted for.

Even when predictions were right, the company couldn’t keep up: they lacked local expertise, renovation capacity and supply chain logistics to actually execute on the model’s recommendations.

The technology worked but the system around it didn’t; Zillow ultimately lost over $880 million and shut down the entire operation.

This pattern hides behind most AI transformation failures. Leaders assume the hard part is building the model when the actual hard part is redesigning the organization to use it.

Systems Thinking for AI Leaders

Systems thinking emerged from Jay Forrester’s work at MIT in the 1950s.

Most executives approach transformation assuming that AI implementation leads to adoption which leads to results. A straight line from investment to outcome.

But organizations are dense webs of feedback loops, delayed consequences, and interdependencies that nobody fully sees. Change one thing and you change twelve others – some immediately, some months later, some in ways that look completely unrelated.

Systems thinking is the discipline of seeing these patterns. A few concepts are particularly relevant here:

Feedback loops either amplify change (reinforcing loops, which can drive virtuous or vicious cycles) or dampen it (balancing loops, like a thermostat maintaining temperature). Your organization is full of both kinds, and they constantly interact in nonlinear ways.

Delays separate actions from consequences. A decision that looks brilliant today might create problems six months from now. The gap between cause and effect makes it easy to misattribute both successes and failures.

Local optimization often undermines global performance. When you optimize one part of the system you can hurt the whole – a team hits its targets while undermining the broader goal, a department automates its workflow and creates a bottleneck downstream.
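To make the first two concepts concrete, here is a minimal toy sketch of a reinforcing loop, a balancing loop, and a balancing loop that reacts to stale information. The function names, gains, and starting values are illustrative assumptions, not a model of any real organization.

```python
def reinforcing(value, gain, steps):
    """Reinforcing loop: each step adds a fraction of the current value,
    so change compounds on itself."""
    history = [value]
    for _ in range(steps):
        value += gain * value
        history.append(value)
    return history


def balancing(value, target, gain, steps, delay=0):
    """Balancing loop: each step closes part of the gap to a target.
    With delay > 0 the correction is based on a reading that is
    `delay` steps old (hypothetical stand-in for slow reporting)."""
    history = [value]
    for _ in range(steps):
        # Use the oldest available reading until enough history exists.
        observed = history[max(0, len(history) - 1 - delay)]
        value += gain * (target - observed)
        history.append(value)
    return history


if __name__ == "__main__":
    print("reinforcing:      ", [round(v, 1) for v in reinforcing(100, 0.1, 5)])
    print("balancing:        ", [round(v, 2) for v in balancing(15, 20, 0.5, 8)])
    print("balancing, delay: ", [round(v, 2) for v in balancing(15, 20, 0.5, 8, delay=3)])
```

With these illustrative numbers, the undelayed balancing loop approaches its target from below, while the delayed version overshoots it because every correction responds to an outdated reading. That is the sense in which delays make cause and effect hard to attribute.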

Three System Failures That Kill AI Initiatives

If you dig into failed AI programs, the same patterns appear again and again. They’re not technology failures – they’re system failures. Here are the three most common.

AI Amplifies Dysfunction

AI doesn’t fix broken processes; it scales them.

When Amazon tried to automate recruiting, their AI was trained on a decade of historical hiring data that reflected an overwhelmingly male technical workforce.

The algorithm didn’t correct for this bias — it learned from it. Resumes containing the word “women’s” were penalized and graduates from women’s colleges were downgraded.

Amazon’s hiring process wasn’t neutral before the AI arrived. The algorithm just made the existing bias faster, more consistent and harder to catch.

If your data reflects a broken system, AI will scale that brokenness with ruthless efficiency.

Insight Without Action

Sometimes the AI works perfectly and nobody does anything about it.

Picture a predictive maintenance AI that can flag equipment failures days in advance. The model works, but maintenance schedules have never been restructured to respond to its predictions. Parts ordering isn’t connected to the alerts. Nobody owns the handoff between “AI says this will break” and “human fixes it.” The insight exists – the business just can’t metabolize it.

The gap between AI output and organizational action is where most initiatives quietly die.

Fixes That Fail

This is a classic pattern from systems thinking: a quick fix addresses a symptom while the underlying problem remains – and often worsens because the fix reduces pressure to address it properly.

AI transformations are full of these. A company deploys a chatbot to handle overwhelming customer service volume, resulting in fewer calls to agents, faster response times and lower costs. The metrics all improve… happy days!

But if volume is high because the product is confusing and customers aren’t getting what they need, the chatbot is just a bandage. The core issue has not been addressed, and now the organization has less incentive to fix the actual problem.

When the quick fix looks like progress, the real fix never happens.

A Diagnostic for AI Implementation Leaders

Before taking that successful pilot organization-wide, slow down and pressure-test your assumptions.

What are the boundaries of this system? The effects of your initiative will reach further than you think. Define what’s inside the system you’re changing – and what’s outside it that might still be affected. Set the boundary too narrow and you’ll miss critical dependencies. Set it too wide and you’ll get lost in complexity. The goal is to capture the key interactions without boiling the ocean.

Who gains and who loses if this succeeds? AI changes power dynamics. If your initiative benefits one group while burdening or threatening another, expect resistance, and recognize that it most likely stems from rational self-interest rather than ignorance.

What feedback loops will this create? Every AI system generates new information flows and potentially new automated actions. Some will reinforce the change you want. Others will counteract it. Think through how you’ll learn whether it’s working, how you’ll catch unintended consequences, and how you’ll adapt. If you don’t design the feedback loop, you won’t have one.

Where are the delays? Your pilot showed results in eight weeks, but some consequences take longer to surface. Maybe the team loses the skills to handle exceptions the AI can’t. Maybe faster approvals mean more errors that won’t show up until customers start complaining. Before you scale, ask what might go wrong that you won’t see for six months.

What assumptions are we making? All corporate initiatives rest on assumptions about human behavior: that employees will trust the recommendations; that customers will prefer the automated experience; that upstream teams will enter data correctly. These assumptions are usually invisible until they turn out to be wrong. Write them down and treat them as hypotheses to test, not facts to rely on.

How AI Transformation Leaders Think Differently

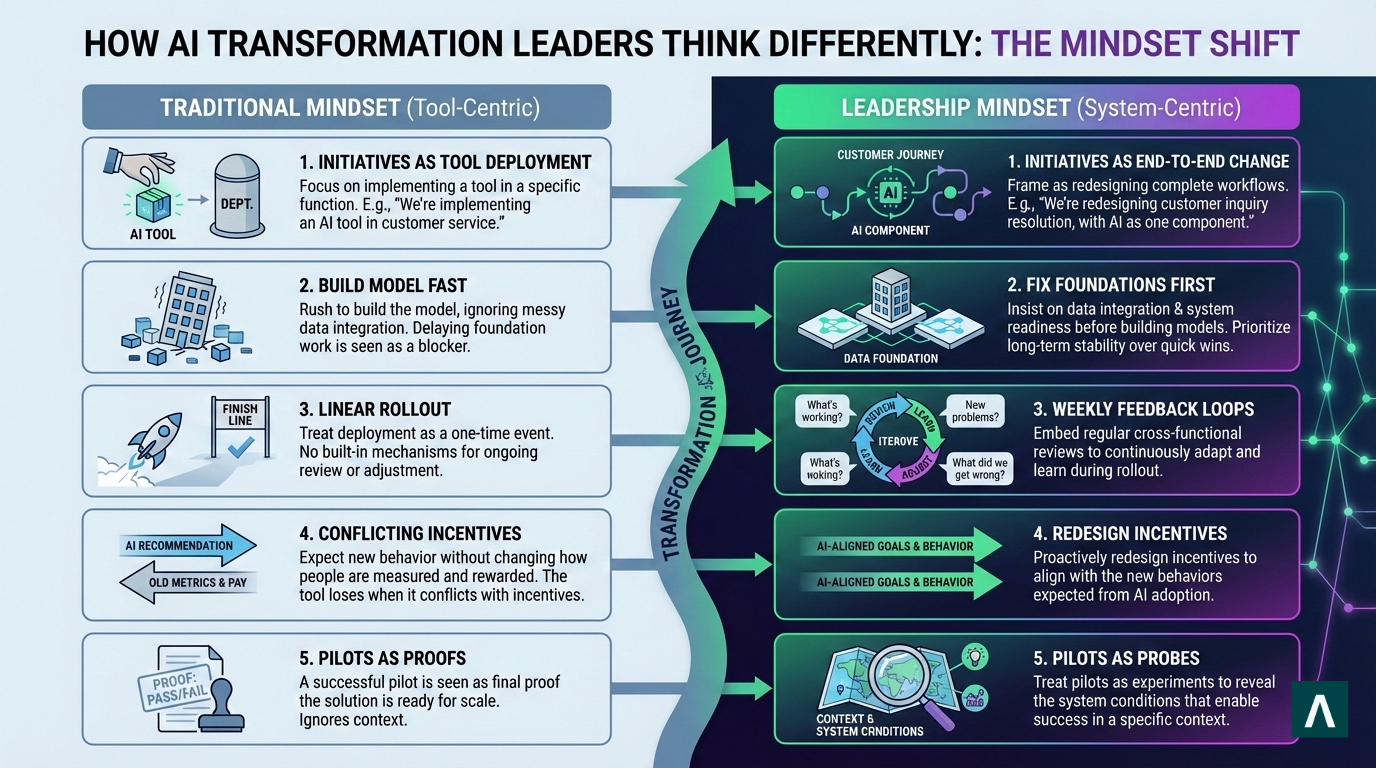

Successful AI transformation leaders take a long-term, system-centric approach.

They frame initiatives as end-to-end change rather than tool deployment. Instead of saying “we’re implementing an AI tool in customer service” they say “we’re redesigning how customer inquiries get resolved, with AI as one component.”

They fix foundations first. If an AI project needs clean unified data, systems thinkers insist on data integration before building the model, even if it delays the exciting work.

They build feedback loops into rollout with weekly cross-functional reviews asking: what’s working, what’s creating new problems, what did we get wrong?

They redesign incentives before expecting new behavior. If a new AI tool recommends actions that conflict with how people are measured and paid, the tool loses every time.

They treat pilots as probes rather than proofs. A successful pilot doesn’t prove the solution is ready — it reveals what system conditions made success possible in that context.

Final Thoughts

AI transformation is 10% technology and 90% organizational change. The companies that figure this out will pull ahead while the rest keep running pilots that succeed in isolation and wonder why nothing scales.

Systems thinking won’t make transformation easy, but it will stop you from solving the wrong problems.

Need systems thinkers to help scale your AI transformation? Contact Axial Search